Human Emotion Detection with Electrocardiographic-Electroencephalographic and Other Signals

Team

- E/17/144, KPCDB Jayaweera, e17144@eng.pdn.ac.lk

- E/17/091, PGAP Gallage, e17091@eng.pdn.ac.lk

- E/17/405, WDLP Wijesinghe, e17405@eng.pdn.ac.lk

Supervisors

- Prof. Roshan Ragel, roshanr@eng.pdn.ac.lk

- Dr. Isuru Nawinne, isurunawinne@eng.pdn.ac.lk

- Dr. Mahanama Wickramasinghe, mahanamaw@eng.pdn.ac.lk

- Dr. Vajira Thambawita, vajira@simula.no

Table of contents

- Abstract

- Related works

- Methodology

- Experiment Setup and Implementation

- Results and Analysis

- Conclusion

- Publications

- Links

Abstract

In this research, we explore integrating Electroencephalography (EEG) and Electrocardiography (ECG) data to classify human emotions with Convolutional Neural Networks (CNNs), with the aim of improving emotion classification accuracy.

Our study includes our own data collection, in which video and audio stimuli were used to elicit five distinct emotions in participants. However, because that sample is small, our model is trained on the DREAMER dataset, which provides signals recorded during affect elicitation via audio-visual stimuli from 23 participants, along with their self-assessments of valence, arousal, and dominance.

Understanding and classifying human emotions is a complex and important aspect of human-computer interaction and healthcare. EEG captures neural activity and reflects neural patterns associated with cognitive processing, while ECG records cardiac signals and provides insights into autonomic nervous system responses. Combining the two aims to improve the robustness and depth of emotion classification and to help identify a person's emotions accurately at a given instant.

Related works

Using physiological signals for human emotion detection is a broad and long-standing research area with a large and still growing body of work. As technology has advanced, research in this area has increasingly adopted ML and AI-based techniques. This section covers the work related to our research. A comprehensive literature review of the field is available at the following link.

Methodology

The project consists of two stages: the data acquisition stage and the development of the CNN model.

Stage 1: Data Acquisition

EEG and ECG data were collected during the initial stage of the project. There were several steps to follow for each participant to ensure proper data collection.

- Read the information sheet

  - Each participant should read the information sheet to understand the study before participating, and must agree to the terms and conditions.

  - Information Sheet

- Complete the pre-experiment survey

  - This survey collects the required information about the participant before the study. All collected data are anonymous and confidential.

  - We collect general information (age, gender, ...), medical history, background details (nationality, race, religion, ...), psychological measures, and technical information that we consider important for studying the variety of the dataset.

  - Pre-Experiment Survey

- Show the audio and video stimuli

  - After the pre-experiment survey was completed, we placed the equipment (EEG and ECG electrodes) on the participant and then showed the videos.

  - There were five videos, one for each of the five emotions.

- Complete the post-experiment survey

  - After each video, participants filled in the relevant section of the post-experiment survey themselves.

  - The purpose of this survey was to find out to what extent each video elicited the expected emotion in the participant.

  - Post-Experiment Survey

Stage 2: Development of the CNN Model

We have used a CNN base approach to develop the emotion classification model. For experimental stage we have developed two main models;

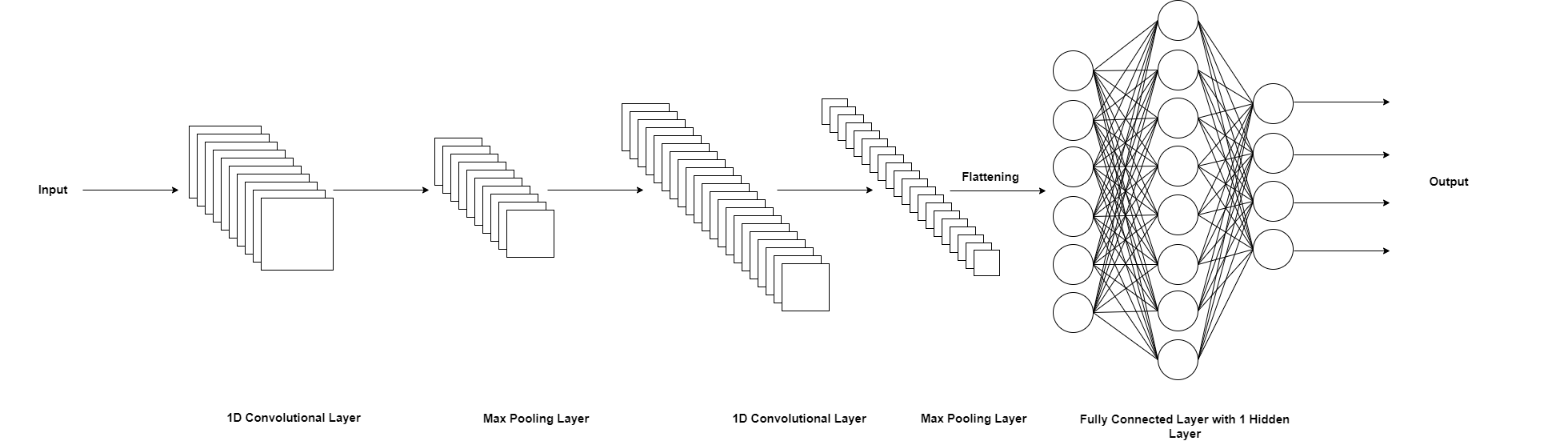

- Individual CNN model : To classify emotions based on ECG or EEG signal. It uses either one signal to make predictions.

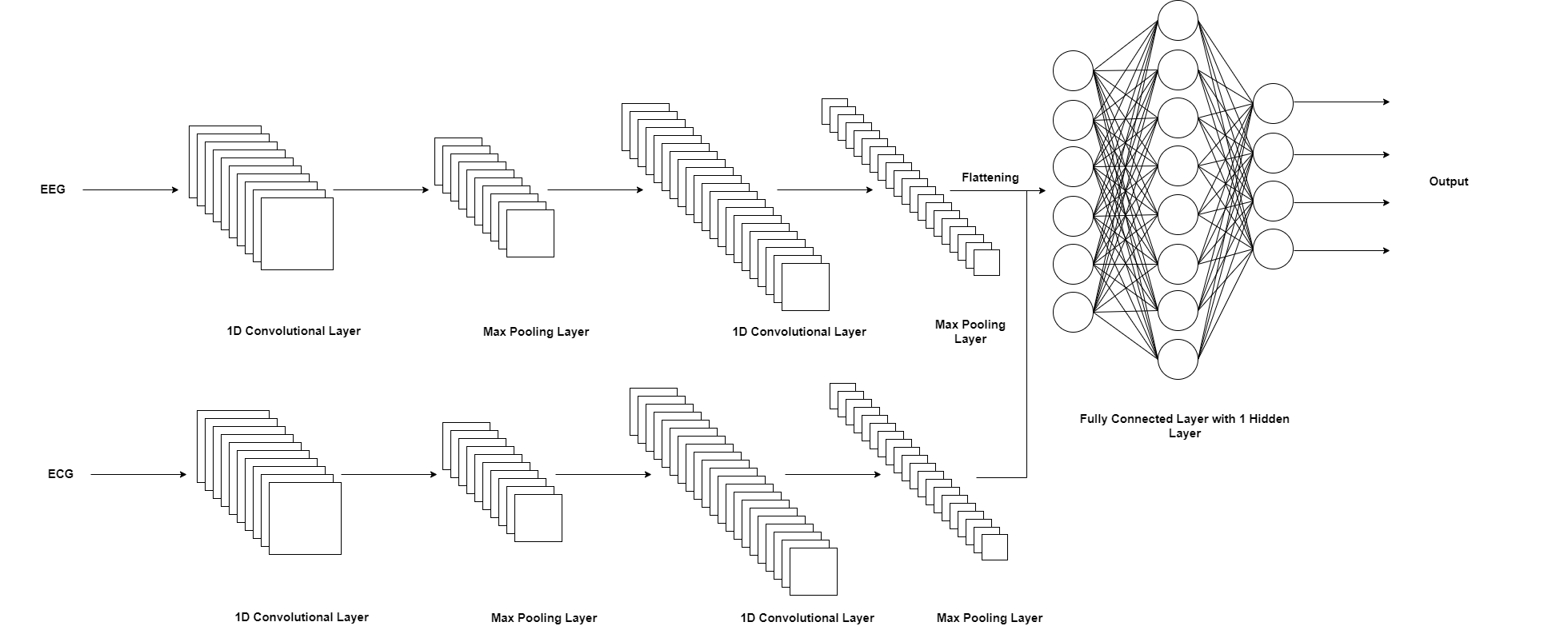

- Combined CNN model : The model was developed to use both EEG & ECG signals together to classify emotions.

The purpose was to identify and compare both individual and compared models according to performance. The model architecture diagrams are shown in the Figure 1 and Figure 2.

Fig.01: Architecture of the Individual CNN Model

Fig.02: Architecture of the Combined CNN Model
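The difference between the individual and combined models can be sketched as a forward pass. The example below is a minimal NumPy illustration with random, untrained weights; it is not the architecture from Fig. 01 and Fig. 02. It assumes feature-level fusion (concatenating pooled features from an EEG branch and an ECG branch), a single convolutional layer per branch, 8 EEG channels, 1 ECG channel, and 5 output classes. All function names and layer sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N_CLASSES = 5  # five target emotions (assumed output size)


def conv1d_relu(x, kernels):
    """Valid 1D convolution over time followed by ReLU.

    x: (channels, time); kernels: (filters, channels, width).
    Returns an array of shape (filters, time - width + 1).
    """
    width = kernels.shape[2]
    # windows has shape (channels, time - width + 1, width)
    windows = np.lib.stride_tricks.sliding_window_view(x, width, axis=1)
    out = np.einsum("ctw,fcw->ft", windows, kernels)
    return np.maximum(out, 0.0)


def branch_features(x, kernels):
    """One convolutional branch: conv + ReLU, then global average pooling."""
    return conv1d_relu(x, kernels).mean(axis=1)


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


# Random placeholder weights; a real model would learn these during training.
eeg_kernels = rng.normal(size=(8, 8, 7)) * 0.1  # 8 filters over 8 EEG channels
ecg_kernels = rng.normal(size=(8, 1, 7)) * 0.1  # 8 filters over 1 ECG channel
w_individual = rng.normal(size=(N_CLASSES, 8)) * 0.1
w_combined = rng.normal(size=(N_CLASSES, 16)) * 0.1


def predict_individual(signal, kernels, weights):
    """Individual model: a single branch feeds the classifier."""
    return softmax(weights @ branch_features(signal, kernels))


def predict_combined(eeg, ecg):
    """Combined model: both branches are concatenated before the classifier."""
    fused = np.concatenate(
        [branch_features(eeg, eeg_kernels), branch_features(ecg, ecg_kernels)]
    )
    return softmax(w_combined @ fused)


if __name__ == "__main__":
    eeg = rng.normal(size=(8, 250))   # 1 s of synthetic EEG at 250 Hz
    ecg = rng.normal(size=(1, 1000))  # 1 s of synthetic ECG at 1000 Hz
    print(predict_individual(ecg, ecg_kernels, w_individual))
    print(predict_combined(eeg, ecg))
```

Because each branch ends in global average pooling, the two signals can have different lengths and sampling rates and still be fused into one feature vector.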

Experiment Setup and Implementation

Step 1: Hardware Setup and Data Collection

The hardware (EEG and ECG devices) had to be placed correctly on the participant before data collection. This involved several steps:

- The EEG device had 8 electrodes. Because we used a customized, wearable EEG setup (Fig. 03), the placement of each electrode on the scalp was checked thoroughly.

- The ECG device had 3 electrodes, which needed sufficient electrode gel before being placed. The electrode placements are shown in Fig. 04 and Fig. 05.

Fig.03: EEG Setup

Fig.04: Electrode Placement Left

Fig.05: Electrode Placement Right

Fig.06: Data Collection Process

Step 2: Software System

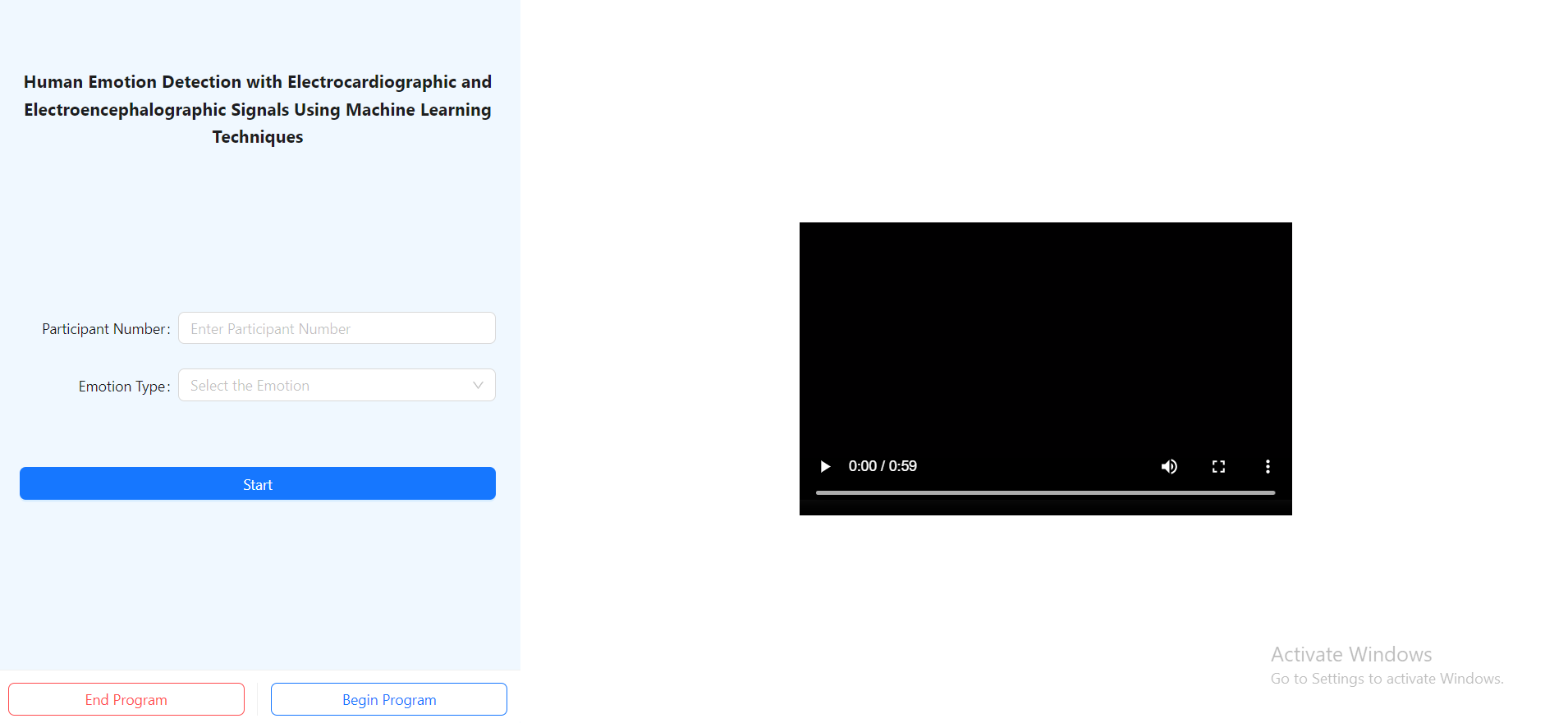

The data collection UI served as the frontend. There are five emotions and therefore five videos. Once data collection starts, the video opens in full screen. An image of the UI is shown below.

Data Collection UI

The backend was developed with Python Flask. Because two tasks had to run in parallel, we added two separate processes: one for collecting EEG data from the EEG device and another for collecting ECG data from the ECG device.

Backend
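The two-process layout described above can be sketched with Python's standard `multiprocessing` module. This is a minimal illustration, not the project's backend: the `collect` worker is a hypothetical stand-in that emits timestamps instead of reading from a device, the Flask routes are omitted, and the sample counts are placeholders. The rates passed to the workers (250 Hz for EEG, 1000 Hz for ECG) are the project's sampling rates.

```python
import multiprocessing as mp
import time


def collect(signal_name, rate_hz, n_samples, out_queue):
    """Stand-in acquisition loop that emits (signal, index, timestamp) tuples.

    A real worker would read from the device driver here; this one only
    generates timestamps so the sketch runs without hardware.
    """
    period = 1.0 / rate_hz
    for i in range(n_samples):
        out_queue.put((signal_name, i, time.time()))
        time.sleep(period)
    out_queue.put((signal_name, None, None))  # sentinel: this stream is done


def run_parallel(n_eeg=25, n_ecg=100):
    """Run one process per device and count the samples each one delivers."""
    queue = mp.Queue()
    workers = [
        mp.Process(target=collect, args=("EEG", 250, n_eeg, queue)),
        mp.Process(target=collect, args=("ECG", 1000, n_ecg, queue)),
    ]
    for worker in workers:
        worker.start()

    counts = {"EEG": 0, "ECG": 0}
    finished = 0
    # Drain the queue before joining so the workers never block on a full pipe.
    while finished < len(workers):
        name, index, _ = queue.get()
        if index is None:
            finished += 1
        else:
            counts[name] += 1

    for worker in workers:
        worker.join()
    return counts


if __name__ == "__main__":
    print(run_parallel())
```

Separate processes keep a slow read on one device from delaying the other, and the shared queue gives the backend a single place to timestamp and store both streams.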

Step 3: Data and Processing

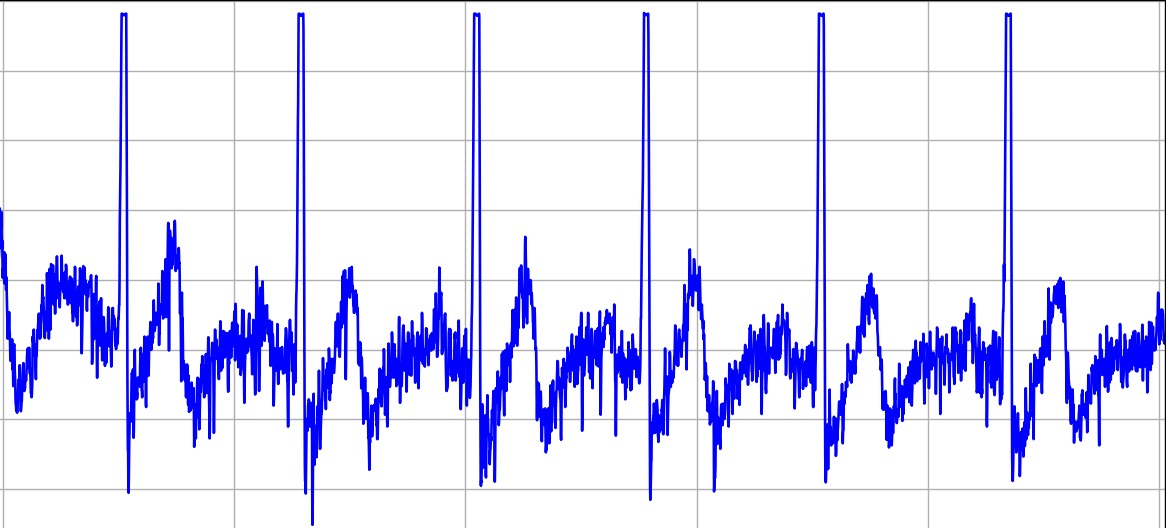

After collecting the data, we applied filters to remove noise introduced by nearby electronic devices.

- Filter: Butterworth bandpass filter with a 0.05 Hz to 100 Hz passband.

- Sampling frequency: ECG 1000 Hz, EEG 250 Hz.

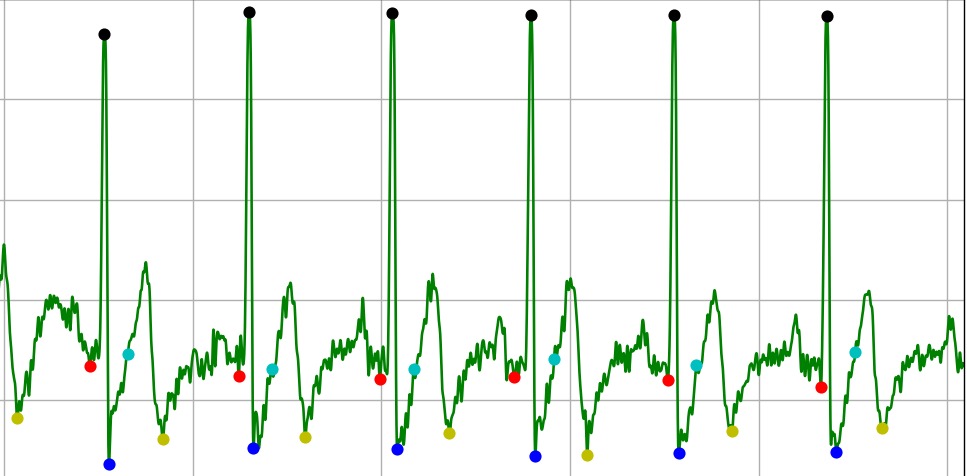

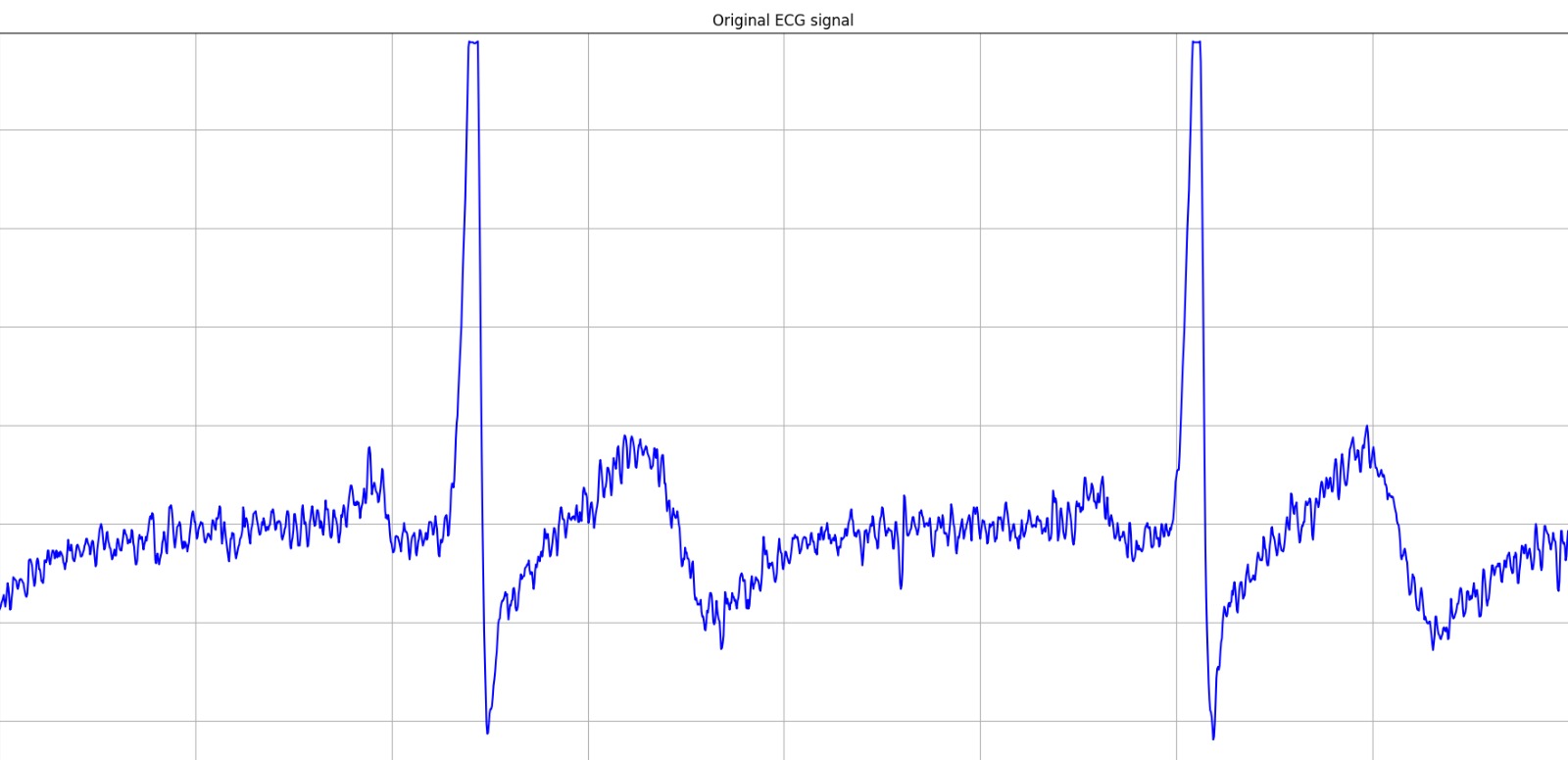

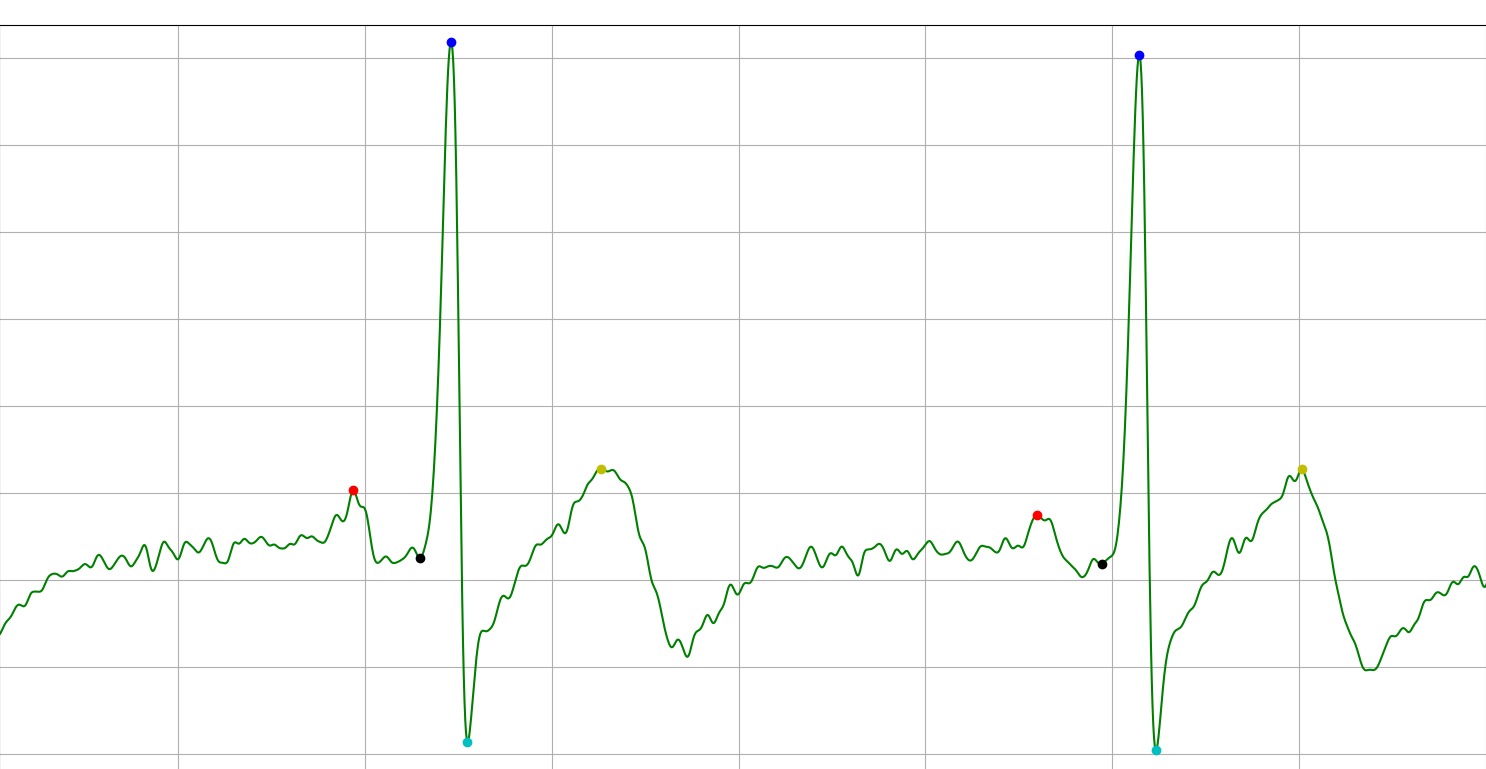

The raw and filtered signals are shown in the figures below.

Raw Signal

Filtered Signal

Raw Signal - Zoomed

Filtered Signal - Zoomed

Results and Analysis

Accuracy Comparison with Data Division

| Model | 60 s | 30 s | 20 s | 10 s | 1 s |
|---|---|---|---|---|---|
| ECG model | 50 | 59.1 | 54.7 | 54.7 | 50 |
| EEG model | 47.6 | 50 | 50 | 47.6 | 47.6 |
| Combined model | 47.6 | 52.6 | 50 | 50 | 47.6 |
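The column headings above (60 s down to 1 s) suggest that each recording was divided into fixed-length segments before training. A minimal sketch of such a division is shown below, assuming non-overlapping windows and dropping any incomplete trailing window. The function name `segment` and the 60 s placeholder recording are hypothetical, and the actual division procedure may differ.

```python
import numpy as np


def segment(signal, fs, window_s):
    """Split a (channels, samples) recording into non-overlapping windows.

    Returns an array of shape (n_windows, channels, window_s * fs).
    Trailing samples that do not fill a whole window are dropped.
    """
    signal = np.atleast_2d(signal)
    win = int(round(window_s * fs))
    n_windows = signal.shape[1] // win
    trimmed = signal[:, : n_windows * win]
    # (channels, n_windows, win) -> (n_windows, channels, win)
    return trimmed.reshape(signal.shape[0], n_windows, win).transpose(1, 0, 2)


if __name__ == "__main__":
    eeg = np.zeros((8, 60 * 250))  # placeholder: 60 s of 8-channel EEG at 250 Hz
    for window_s in (60, 30, 20, 10, 1):
        print(window_s, segment(eeg, 250, window_s).shape)
```

Shorter windows yield more training examples per recording, but each example carries less temporal context.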

ML Model Accuracy Comparison with the DREAMER Research

| Model | This approach | DREAMER: Valence | DREAMER: Arousal | DREAMER: Dominance |
|---|---|---|---|---|
| ECG model | 52.4 | 61.8 | 62.3 | 61.8 |
| EEG model | 59.1 | 62.3 | 62.3 | 61.5 |
| Combined model | 50 | 62.4 | 62.1 | 61.8 |

Conclusion

The research outlines a procedure for emotion detection using Electroencephalography (EEG) and Electrocardiography (ECG). It begins by presenting the datasets and then compares Artificial Neural Networks (ANN), Support Vector Machines (SVM), and Convolutional Neural Networks (CNN) for emotion recognition from EEG and ECG. The detailed steps include subject education, hardware setup, and data collection with minimal interference. The study selects the DREAMER dataset for machine learning model development, establishes a baseline model, and improves on it. The results show the potential of predicting emotions from ECG and EEG signals and offer insights for future research on emotion prediction with these signals.