Project Title

Team

- E/16/069, De Silva M.D.S., email

- E/16/070, De Silva N.S.C.K.S., email

- E/16/232, Marzook F.S., email

Supervisors

- Prof. Roshan Ragel, email

- Dr. Isuru Nawinne, email

- Dr. Mahanama Wickramasinghe, email

- Dr. Suranji Wijekoon, email

- Mr. Theekshana Dissanayake, email

Table of Contents

Abstract

Researchers in biosensor-based human emotion recognition have recently applied many kinds of machine learning models to identify human emotions. With only a few biosensors integrated, most of these models still cannot identify emotions with high classification accuracy or distinguish many emotions. Convolutional neural networks (CNNs) have been used successfully on a variety of practical machine learning problems that demand high classification accuracy. Building on that, this study proposes a deep learning method that uses CNNs to classify emotions from electrocardiogram (ECG) signals. Data was collected from 25 subjects, with video and audio as the emotion elicitation technique, to create a dataset covering nine emotions chosen from the valence-arousal-dominance 3D emotional model. The dataset was preprocessed and prepared for training and testing the 2D CNN models. Three 2D CNN classifiers are implemented in this study: a valence-based emotion classification model, an arousal-based emotion classification model, and a nine-emotion classification model. These models are used to predict the valence, arousal and dominance values of an ECG signal, map those values onto the valence-arousal-dominance 3D space, and predict the emotion. The valence-based model achieved an accuracy of 70%, the arousal-based model 65%, and the nine-emotion model 40%. Furthermore, we explored using the valence-based model to predict the emotions of animals, specifically dogs, and obtained an accuracy of 32%.

Methodology

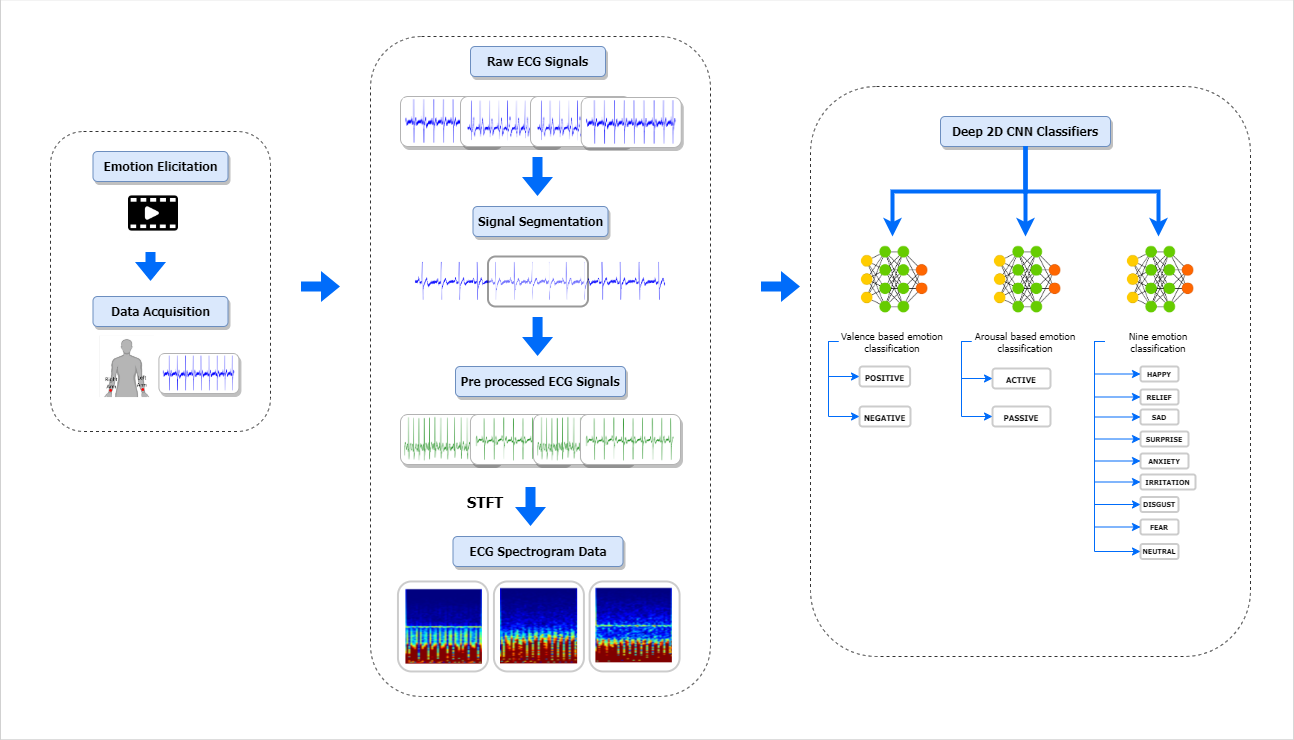

Figure 01: Methodology Overview

The overall procedure of the proposed emotion recognition model is shown in Figure 01. ECG signals for the nine selected emotions were collected using an experimental setup in which emotions were elicited with short video clips selected from movies. The ECG signals were recorded with the Heart and Brain SpikerShield sensor, together with a web application developed to automate the data collection process. The collected ECG signals were divided into segments of equal duration, 20 seconds each. The segmented raw ECG signals were then preprocessed using several techniques to remove noise and normalize them. Afterward, each ECG segment was transformed into a time-frequency spectrogram image using the short-time Fourier transform (STFT). The ECG spectrogram images were fed into the proposed deep two-dimensional convolutional neural network (CNN) models.
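The segmentation, normalization and spectrogram steps described above can be sketched as follows. This is a minimal illustration using NumPy only, not the project's actual pipeline: the sampling rate `FS`, the STFT window length and overlap, and all function names are assumptions introduced here, and the noise-removal filters are omitted because the text does not name the techniques used.

```python
import numpy as np

FS = 250              # assumed sampling rate in Hz (not stated in the text)
SEGMENT_SECONDS = 20  # segment length stated in the text


def segment_signal(ecg, fs=FS, seconds=SEGMENT_SECONDS):
    """Split a 1-D recording into non-overlapping fixed-length segments."""
    ecg = np.asarray(ecg, dtype=float)
    samples = fs * seconds
    n_segments = len(ecg) // samples  # an incomplete tail is dropped
    return ecg[: n_segments * samples].reshape(n_segments, samples)


def normalize(segment):
    """Remove the DC offset and scale to unit variance (z-score)."""
    centered = segment - segment.mean()
    std = centered.std()
    return centered / std if std > 0 else centered


def stft_spectrogram(segment, fs=FS, nperseg=256, noverlap=128):
    """Magnitude spectrogram from a Hann-windowed short-time Fourier transform."""
    hop = nperseg - noverlap
    window = np.hanning(nperseg)
    n_frames = 1 + (len(segment) - nperseg) // hop
    frames = np.stack(
        [segment[i * hop : i * hop + nperseg] * window for i in range(n_frames)]
    )
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T  # (freq_bins, time_frames)
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + nperseg / 2) / fs
    return freqs, times, magnitude


if __name__ == "__main__":
    # Synthetic 65-second test tone standing in for a real ECG recording.
    t = np.arange(65 * FS) / FS
    recording = np.sin(2 * np.pi * 10 * t)
    for seg in segment_signal(recording):
        freqs, times, spec = stft_spectrogram(normalize(seg))
        print(spec.shape)
```

Each resulting magnitude array can be rendered or saved as an image before being passed to a 2D CNN. In practice a band-pass or notch filter would likely precede the normalization step.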

Based on the two-dimensional emotional model, the collected ECG signals were categorized in three ways. The first categorization was by distinct emotion, giving nine classes. The second was by the valence of each emotion, giving two classes: positive and negative. The third was by the arousal of each emotion, giving two classes: active and passive. A deep two-dimensional convolutional neural network (CNN) model was developed for each of the three categorization methods.
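The three categorizations can be derived from a single lookup table, as in the sketch below. The emotion names and their valence/arousal assignments are hypothetical placeholders, since this section does not list the nine emotions used in the study. `EmotionInfo`, `EMOTION_TABLE` and `build_label_sets` are names introduced only for this illustration.

```python
from typing import Dict, List, NamedTuple


class EmotionInfo(NamedTuple):
    valence: str  # "positive" or "negative"
    arousal: str  # "active" or "passive"


# Hypothetical entries for illustration only; the real table would hold the
# study's nine emotions with their valence and arousal assignments.
EMOTION_TABLE: Dict[str, EmotionInfo] = {
    "emotion_a": EmotionInfo("positive", "active"),
    "emotion_b": EmotionInfo("positive", "passive"),
    "emotion_c": EmotionInfo("negative", "active"),
    "emotion_d": EmotionInfo("negative", "passive"),
}


def build_label_sets(
    emotions: List[str], table: Dict[str, EmotionInfo] = EMOTION_TABLE
) -> Dict[str, List[int]]:
    """Return integer labels for the three categorizations of each sample."""
    classes = sorted(table)  # fixed class order for the per-emotion labels
    return {
        "emotion": [classes.index(e) for e in emotions],
        "valence": [int(table[e].valence == "positive") for e in emotions],
        "arousal": [int(table[e].arousal == "active") for e in emotions],
    }


if __name__ == "__main__":
    # One elicited emotion per recorded ECG segment.
    print(build_label_sets(["emotion_c", "emotion_a", "emotion_d"]))
```

Keeping one table as the source of all three label sets helps ensure that the valence and arousal labels stay consistent with the per-emotion labels when the same spectrograms are reused to train each model.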

As an extension to the research, we explored whether the emotion recognition model developed on human data could recognize animal emotions. The valence-based model was used for this purpose, and dogs were selected as the subjects of the experiment.

Experiment Setup

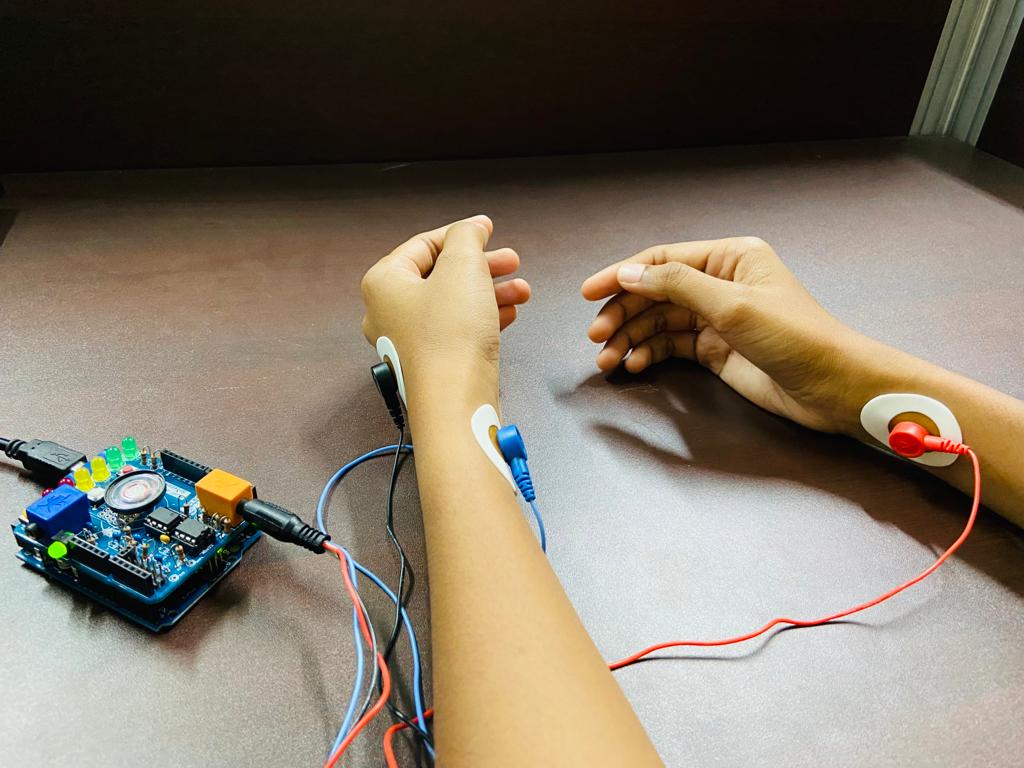

Figure 02: Electrode Placement in Human

Figure 03: Experimental Setup of Human

Figure 04: Electrode Placement in Animal

Figure 05: Experimental Setup of Animal