Features

Gesture Recognition

Using wearable-based TinyML gesture recognition, users can control devices with intuitive hand gestures for a seamless, hands-free smart home experience.

System Architecture

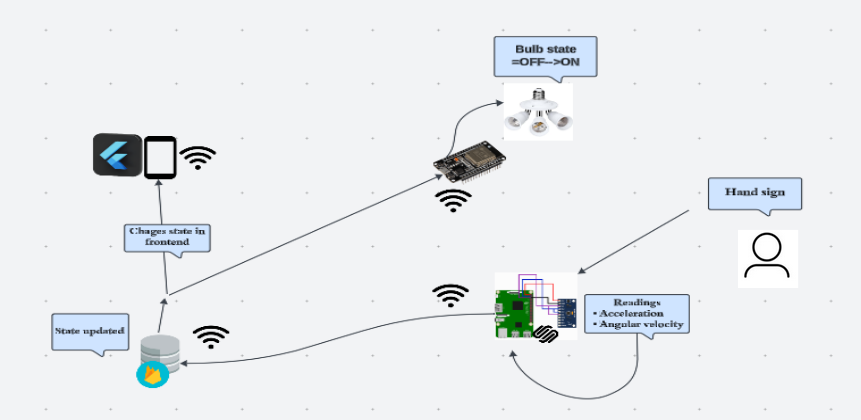

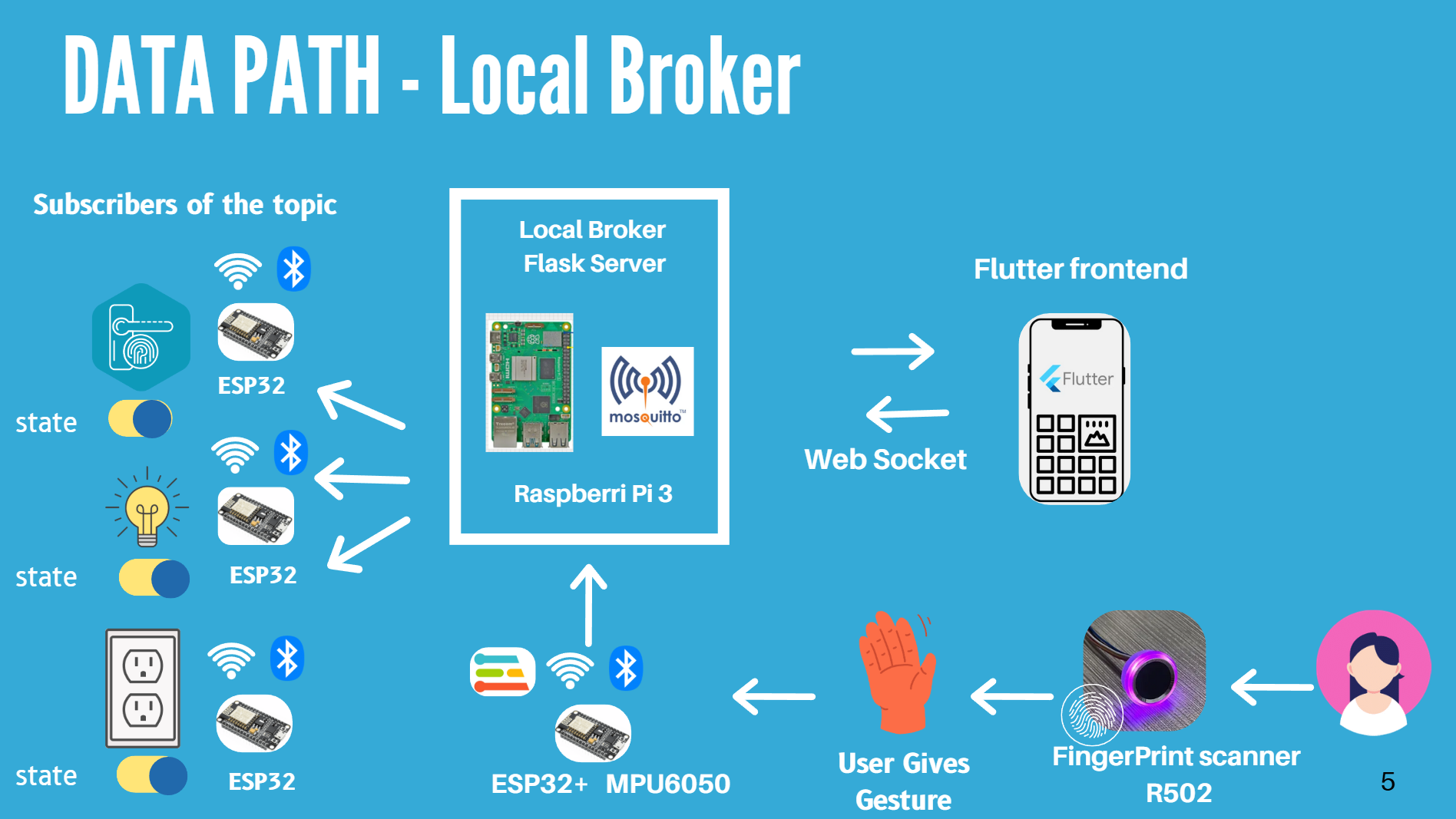

Our architecture supports both cloud-based and local communication for enhanced flexibility and reliability:

- ESP32 devices read motion data from an MPU6050 sensor and classify gestures on-device using Edge Impulse TinyML models; a fingerprint sensor on the same device handles user authentication.

- Classified gestures are published via MQTT to either AWS IoT Core or a Mosquitto broker hosted on a Raspberry Pi 3.

- The Raspberry Pi also runs a Flask server to simulate cloud services for offline operation.

- Smart devices subscribed to MQTT topics respond instantly to gesture commands.

- AWS IoT triggers Lambda functions to update Firebase, while GCP Firebase Functions sync mobile-triggered changes to MQTT.

- The Flutter mobile app reflects real-time device states using Firebase listeners.
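The publish path described in the list above can be sketched as follows. This is a minimal illustration rather than the project's actual code: the broker hostnames, the `flicknest/gestures/<device_id>` topic scheme, and the payload fields are all assumptions, and the reachability check is passed in as a function so the fallback logic can be exercised without a network.

```python
import json
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical endpoints; the real hostnames are not given in this document.
CLOUD_BROKER = ("cloud-broker.example.com", 8883)   # stands in for AWS IoT Core
LOCAL_BROKER = ("raspberrypi.local", 1883)          # stands in for Mosquitto on the Pi


@dataclass
class GestureEvent:
    device_id: str      # wearable that classified the gesture
    gesture: str        # label produced by the TinyML model
    confidence: float   # classifier score in [0, 1]


def build_message(event: GestureEvent) -> Tuple[str, str]:
    """Return an (MQTT topic, JSON payload) pair for a classified gesture."""
    topic = f"flicknest/gestures/{event.device_id}"  # assumed topic scheme
    payload = json.dumps(
        {"gesture": event.gesture, "confidence": round(event.confidence, 2)},
        sort_keys=True,
    )
    return topic, payload


def choose_broker(is_reachable: Callable[[str, int], bool]) -> Tuple[str, int]:
    """Prefer the cloud broker; fall back to the local broker if it is unreachable."""
    if is_reachable(*CLOUD_BROKER):
        return CLOUD_BROKER
    return LOCAL_BROKER
```

In a real deployment `is_reachable` might wrap a TCP connection attempt with a short timeout, and the returned host and port would be handed to whichever MQTT client library the device uses.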

The two images above are the system diagrams for the cloud-based and local configurations.

Key Features

- Hands-free gesture control via TinyML.

- Local and cloud MQTT fallback communication.

- Fingerprint and gesture-based authentication.

- Real-time updates through Firebase and Flutter app UI.

Hardware Components

| Component | Description |
|---|---|
| ESP32 Dev Module | Processes sensor data, runs the Edge Impulse ML model, and communicates with AWS IoT Core via MQTT. |
| MPU6050 Sensor | Captures 6-axis accelerometer and gyroscope data for gesture recognition. |
| Fingerprint Door Lock | Enables secure fingerprint-based door unlocking. |
| Smart Sockets & Light Modules | Controlled via ESP32 to automate appliances and lighting based on gesture commands. |
| R502 Fingerprint Scanner | Authenticates the user by fingerprint on the wristband. |

Software Components

| Component | Description |
|---|---|
| Edge Impulse ML Model | Classifies gestures in real time on the ESP32 microcontroller. |
| MQTT Communication | Enables reliable message delivery between ESP32 and AWS IoT Core. |
| AWS IoT Core | Acts as the central MQTT broker for managing device commands. |
| Firebase | Provides real-time database updates and role-based access control (RBAC). |
| Flutter Mobile App | Displays real-time device status, logs, and gesture configuration settings. |
| GCP Function | Catches Firebase triggers raised by the mobile app and publishes them to AWS IoT Core. |
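To illustrate the classification step, here is a rough sketch of how per-label scores from a gesture classifier might be turned into a single decision. The on-device code would be C++ running on the ESP32; this Python version only shows the decision logic. The label names, the `idle` class, and the 0.80 threshold are assumptions, not values taken from the project.

```python
from typing import Dict, Optional

CONFIDENCE_THRESHOLD = 0.80  # assumed value; would be tuned per model


def pick_gesture(scores: Dict[str, float],
                 threshold: float = CONFIDENCE_THRESHOLD,
                 idle_label: str = "idle") -> Optional[str]:
    """Return the top-scoring gesture label, or None if nothing should be sent."""
    if not scores:
        return None
    # Find the label with the highest classifier score.
    label, score = max(scores.items(), key=lambda item: item[1])
    # Suppress low-confidence results and the (assumed) idle/no-gesture class.
    if score < threshold or label == idle_label:
        return None
    return label
```

Rejecting low-confidence windows before publishing keeps ambiguous wrist movements from toggling devices by accident, at the cost of occasionally ignoring a genuine gesture.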

System Workflow

- Gesture is captured and classified by ESP32.

- MQTT message is sent to cloud or local broker.

- Smart device responds to the published command.

- Firebase is updated via Lambda or Flask backend.

- Mobile app updates reflect real-time status from Firebase.
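The workflow above can be walked through end to end with a toy simulation. Everything here is a stand-in: `InMemoryBroker` replaces the MQTT broker, the `state_store` dictionary replaces Firebase, and the gesture-to-command mapping and topic names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class InMemoryBroker:
    """Toy stand-in for an MQTT broker: exact-match topics, synchronous delivery."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[str, str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[str, str], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, payload: str) -> None:
        for callback in list(self._subscribers[topic]):
            callback(topic, payload)


# Assumed gesture-to-command mapping, for illustration only.
GESTURE_COMMANDS = {
    "swipe_up": ("living_room_light", "on"),
    "swipe_down": ("living_room_light", "off"),
}

state_store: Dict[str, str] = {}  # stand-in for the Firebase database


def on_command(topic: str, payload: str) -> None:
    """Smart device handler: apply the command and record the new state."""
    device = topic.split("/")[-1]
    state_store[device] = payload


def handle_gesture(broker: InMemoryBroker, gesture: str) -> bool:
    """Map a classified gesture to a device command and publish it."""
    if gesture not in GESTURE_COMMANDS:
        return False
    device, action = GESTURE_COMMANDS[gesture]
    broker.publish(f"flicknest/devices/{device}", action)
    return True


broker = InMemoryBroker()
broker.subscribe("flicknest/devices/living_room_light", on_command)
handle_gesture(broker, "swipe_up")
print(state_store)  # {'living_room_light': 'on'}
```

In the real system the state update would go through a Lambda function or the Flask backend rather than the device handler itself, and the mobile app would read the result through a Firebase listener.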

Budget Breakdown

| Item | Quantity | Unit Cost (LKR) | Total (LKR) |
|---|---|---|---|
| Seeed XIAO ESP32 Board | 1 | 3,200 | 3,200 |
| ESP32 Dev Module Board | 4 | 2,400 | 9,600 |
| IMU Sensor | 1 | 1,000 | 1,000 |
| R502 Fingerprint Sensor | 1 | 6,300 | 6,300 |
| Battery Pack | 1 | 200 | 200 |
| Plug Sockets | 1 | 1,000 | 1,000 |
| Electronic Door Lock | 1 | 2,500 | 2,500 |
| 230V to 5V Converters | 4 | 300 | 1,200 |
| Relays, Triacs, Resistors, etc. | 4 | 300 | 1,200 |
| Wires, Soldering Components | 1 | 3,000 | 3,000 |
| Other Expenses | 1 | 2,000 | 2,000 |
| Flexible 3D-Printed Wearable Band | 1 | 1,800 | 1,800 |
| Total | | | 33,000 |
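As a quick check of the arithmetic, the line totals and grand total can be recomputed from the quantities and unit costs in the table. The short item keys below are illustrative names, not identifiers used by the project.

```python
# (item key, quantity, unit cost in LKR), copied from the budget table
BUDGET = [
    ("xiao_esp32_board", 1, 3200),
    ("esp32_dev_module", 4, 2400),
    ("imu_sensor", 1, 1000),
    ("r502_fingerprint_sensor", 1, 6300),
    ("battery_pack", 1, 200),
    ("plug_sockets", 1, 1000),
    ("electronic_door_lock", 1, 2500),
    ("converters_230v_to_5v", 4, 300),
    ("relays_triacs_resistors", 4, 300),
    ("wires_soldering", 1, 3000),
    ("other_expenses", 1, 2000),
    ("wearable_band_3d_print", 1, 1800),
]

# Line total = quantity x unit cost; grand total = sum of line totals.
line_totals = {name: qty * unit_cost for name, qty, unit_cost in BUDGET}
grand_total = sum(line_totals.values())
print(f"Grand total: {grand_total:,} LKR")  # Grand total: 33,000 LKR
```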

Conclusion

This design targets a low-latency, secure, gesture-controlled home automation experience. By combining on-device gesture classification on the ESP32, an R502 fingerprint scanner, smart sockets, and MQTT-based communication over either a cloud or a local broker, the system provides hands-free automation and real-time device control.

Future Developments

- Integration with voice assistants like Alexa and Google Assistant.

- Expansion to support more devices and appliances.

- Enhanced machine learning models for improved gesture recognition accuracy.

Commercialization Plans

- Partnering with smart home device manufacturers for integration.

- Launching a subscription-based service for advanced features.

- Expanding to international markets with localized support.

GitHub Repository

Explore our project on GitHub: FlickNest GitHub Repo